OpenAI has begun rolling out parental control features for ChatGPT today, with plans to make them available to all users on both web and mobile in the near future.

In a recent announcement on X (formerly Twitter), OpenAI explained that these controls aim to protect adolescent users of the chatbot. This initiative responds to growing concerns about the lack of adequate safety mechanisms on the platform.

With this new functionality, parents can link their accounts to their children's ChatGPT accounts. Once linked, they can customize how the chatbot responds, manage features such as memory and chat history, and receive alerts if signs of potential harm are detected.

This update follows a recent lawsuit filed by the parents of a 16-year-old named Adam Raine, who accused OpenAI of enabling their son’s self-harm by providing detailed instructions on suicide methods through ChatGPT.

Beyond this case, there has been widespread public apprehension about chatbots potentially contributing to an increase in self-harm incidents among teenagers.

"We have collaborated extensively with experts, advocacy organizations, and policymakers to shape our approach, and we plan to enhance and broaden these controls over time," OpenAI stated on X, emphasizing its commitment to safeguarding young users.

Understanding ChatGPT’s Parental Control Features

This new parental control system allows families to connect parent and teen accounts, granting parents access to various management tools. These include the ability to modify chatbot behavior, impose usage limits, and implement safety filters tailored to their preferences.

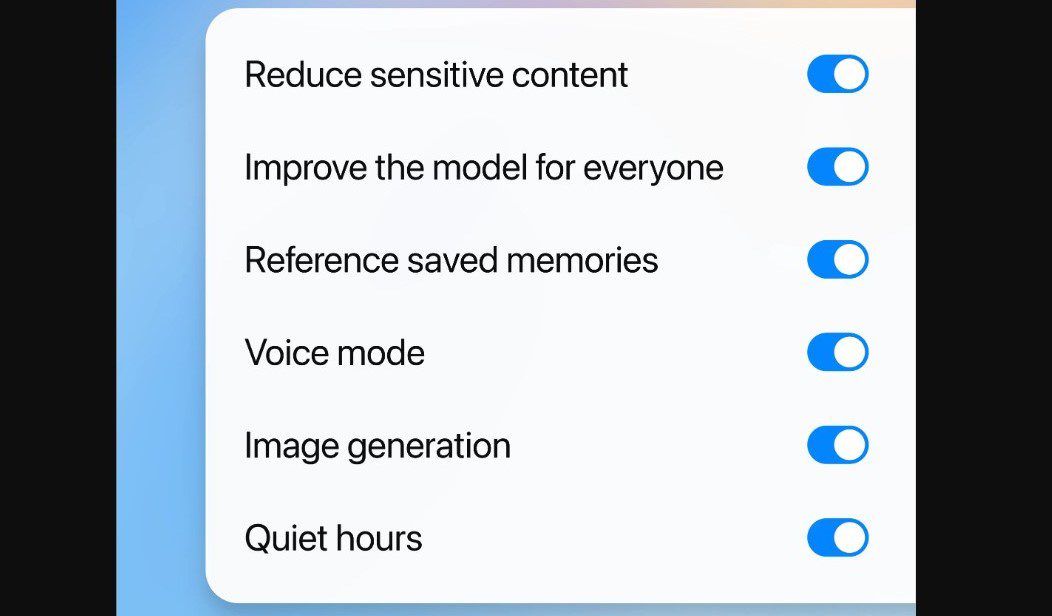

For instance, parents can activate a content sensitivity filter that limits exposure to graphic or mature material. Another key option is the Memory toggle, which, when disabled, prevents ChatGPT from retaining or referencing past conversations in future interactions.

Additional features include Quiet Hours, which lets parents block access to the chatbot during set times, and a Voice Mode restriction that disables voice interactions for teens. Parents can also opt their teen's conversations out of model training and turn off image generation.

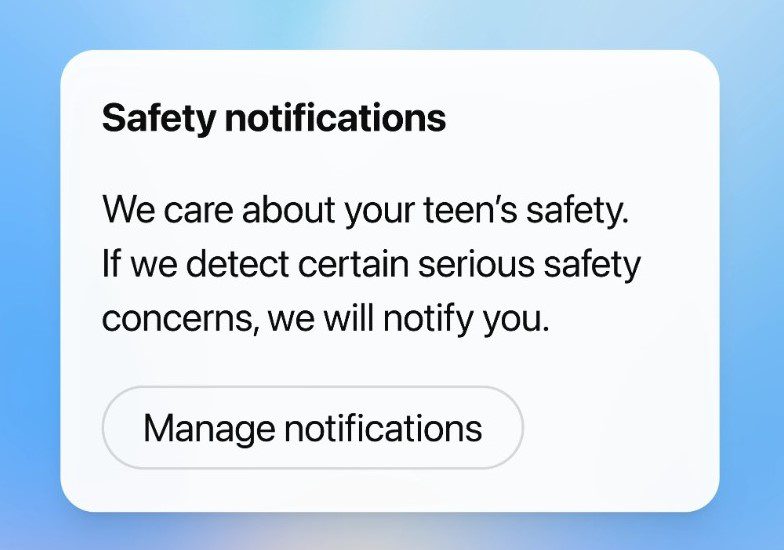

Importantly, parents do not have routine access to their teen's conversations. Only in exceptional situations, when ChatGPT detects signs of a serious safety risk, are parents notified by email, SMS, or push alert so they can intervene promptly.

How to Enable Parental Controls on ChatGPT

To activate these safety features and link your teen’s account, follow these steps:

Step 1: Sign in to your ChatGPT account or create one if you haven’t already.

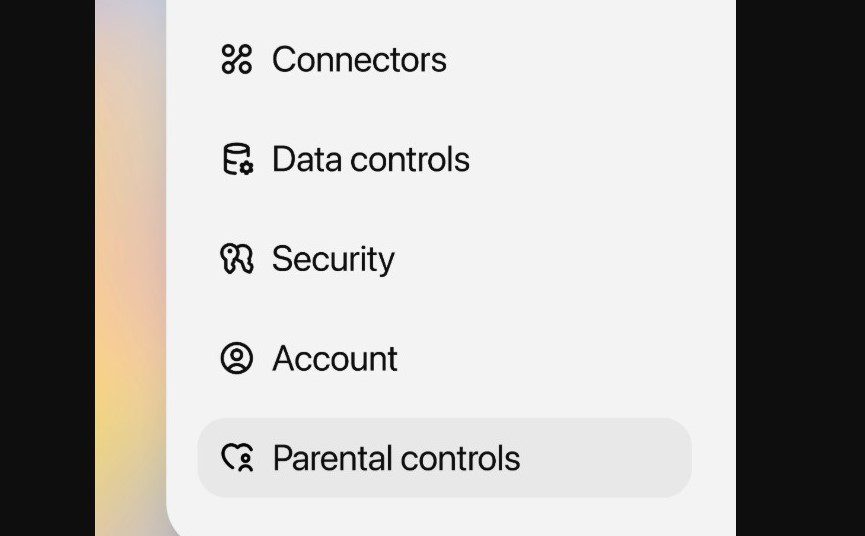

Step 2: Open ChatGPT's settings and go to the parental controls section.

Step 3: Follow the on-screen instructions to invite your teen by email or text message to link your accounts.

Step 4: Once your teen accepts the invitation and the accounts are linked, you will receive a confirmation notification.

Step 5: From your account, you can then customize settings and apply the desired restrictions.

Note: Teens retain the ability to disconnect their accounts at any time without parental approval, but parents will be alerted if the link is severed.

The Importance of These Controls

With ChatGPT's user base estimated at 800 million (roughly 10% of the global population, according to a July report by JPMorgan Chase), questions about the platform's safety have become increasingly urgent. While ChatGPT excels at offering thoughtful insights and help with problem-solving, the risks it poses to vulnerable users cannot be ignored.

Research has revealed troubling tendencies, such as the chatbot offering advice on hiding eating disorders or even drafting distressing suicide notes when prompted. Alarmingly, over half of the 1,200 responses analyzed in one study were deemed harmful.

These findings show why parental controls were needed, and they point to a broader responsibility for developers to prioritize user safety over popularity and engagement metrics.

Beyond chatbots, safeguarding teenagers on social media platforms is also gaining momentum. For example, Meta’s Facebook has introduced tools that allow parents to monitor their teen’s account activity.

As social media and AI technologies become deeply integrated into young people’s lives, establishing robust protective measures is essential to ensure their well-being.
