South Africa is currently experiencing a dramatic escalation in scams involving artificial intelligence (AI) and deepfake technology, with reported incidents soaring by 1,200% within just one year. This alarming trend has impacted a wide array of industries, including banking, fintech, insurance, retail, media, and government services.

Deepfakes refer to digitally manipulated content (such as videos, images, or audio) that AI algorithms generate to convincingly replicate real individuals. These fabrications can imitate a person’s facial expressions, voice, and even behavioral nuances with striking realism.

These synthetic creations are produced with machine learning, a branch of AI in which a model is trained on thousands of authentic photos, videos, and audio clips of a subject to learn that person’s distinguishing characteristics. The trained model then generates entirely fabricated yet believable content.

For instance, a deepfake video might falsely depict a political leader making statements they never uttered. Similarly, audio deepfakes can mimic someone’s voice to manipulate others into divulging sensitive information. Presently, such deceptive content is exploited to spread falsehoods, tarnish reputations, steal identities, and bypass security systems reliant on biometric verification.

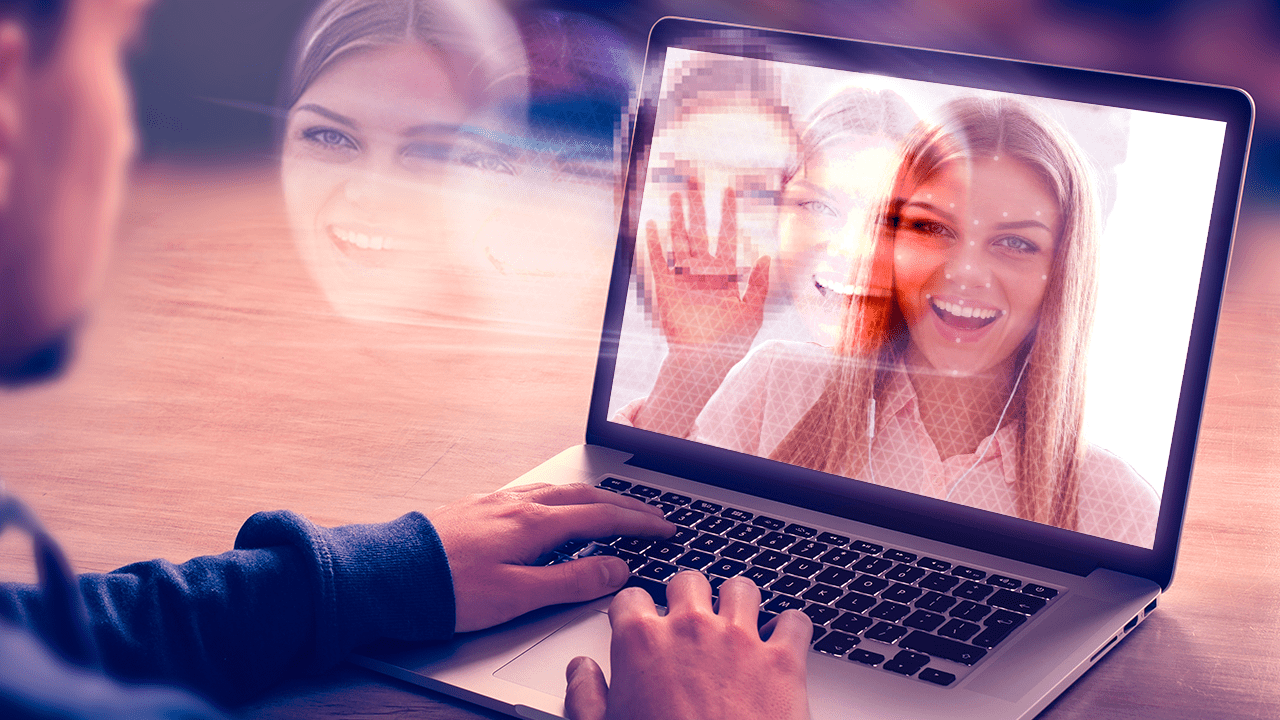

The financial sector has been particularly vulnerable to these AI-driven frauds. Banks and fintech companies are grappling with scams such as SIM swapping, fraudulent account openings, and impersonation schemes. In many cases, AI-powered voice cloning is used to replicate bank employees’ voices, deceiving victims into revealing confidential data or approving fraudulent transactions.

First National Bank (FNB) of South Africa recently revealed that its customers have been targeted by deepfake scams. Fraudsters impersonated bank representatives and even relatives of clients through AI-generated voice, video, and text messages. These victims were coerced into urgently transferring funds, with some suffering significant financial losses before recognizing the deception.

Experts attribute this surge in fraud to South Africa’s extensive digital connectivity. With more than 50 million internet users, approximately 124 million active mobile devices, and 27 million social media accounts, the country offers cybercriminals a vast pool of potential targets.

AI Fraud Extends Across Multiple Industries

AI-enabled deception is not confined to banking alone. Insurance providers have reported sophisticated fraudulent claims involving doctored videos and falsified medical documentation. These convincing forgeries often evade traditional verification methods, compelling insurers to adopt advanced claim authentication technologies.

The retail sector is also under threat. Cybercriminals create counterfeit online storefronts and deceptive advertising campaigns that appear authentic, redirecting shoppers to bogus websites where they pay for nonexistent products. This undermines both e-commerce platforms and brick-and-mortar stores that depend on digital marketing.

Media organizations have encountered manipulated footage and synthetic voices used to disseminate false information and defame individuals. Likewise, government agencies have been targeted with forged documents and counterfeit identification data, enabling unauthorized access to official records.


In response to the growing threat, enhanced detection and prevention technologies are being deployed. Real-time anomaly detection systems monitor for unusual activities, while biometric verification combined with liveness detection helps confirm the genuineness of faces and voices.

Techniques such as device fingerprinting track suspicious behavior across multiple devices, and behavioral analytics identify fraudulent patterns before damage occurs. Alongside these technological defenses, raising public awareness remains a critical component in thwarting scams.
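To make the idea of anomaly detection more concrete, here is a minimal Python sketch. It is an illustration only, not a description of how any bank mentioned here actually works: the function name `is_anomalous`, the sample transaction amounts, and the threshold of three standard deviations are all assumptions chosen for the example. The sketch flags a new transaction when its amount lies far outside the customer's past behavior.

```python
from statistics import mean, stdev


def is_anomalous(history, amount, threshold=3.0):
    """Return True if `amount` is more than `threshold` standard
    deviations away from the mean of the past amounts in `history`.

    Hypothetical helper for illustration; real systems use many
    more signals than the transaction amount alone.
    """
    if len(history) < 2:
        # Too little data to estimate a spread, so do not flag.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past amounts are identical: any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > threshold


# Assumed sample data: a customer's recent transfer amounts.
history = [250.0, 300.0, 275.0, 320.0, 290.0]

print(is_anomalous(history, 310.0))    # a typical amount
print(is_anomalous(history, 15000.0))  # a sudden, very large transfer
```

A production system would likely combine a check like this with other signals, such as the device used, the time of day, and whether the recipient is new, because an urgent deepfake-driven request does not always involve an unusual amount.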

Strengthening Regulations and Building Resilience

Industry leaders are pushing for more stringent legislation to combat AI-facilitated crimes. Proposals include laws specifically targeting the malicious use of deepfake technology, coupled with harsher penalties for perpetrators. Regulatory frameworks are being updated to integrate AI tools, aiming to establish a unified approach for detecting and prosecuting AI-related fraud across sectors.

The South African government recognizes the urgent need to address AI misuse and is advocating for immediate national readiness. Collaborative efforts with academic institutions are underway to equip engineering students with skills to develop systems capable of identifying deepfakes and preventing AI-driven cyberattacks.

Moreover, interdisciplinary initiatives involving social scientists and ethicists are being launched to formulate policies that safeguard citizen privacy and uphold ethical standards in AI deployment.

Financial institutions and fintech companies are urged to intensify consumer education efforts. Customers should be encouraged to verify the identity of callers, scrutinize messages carefully, and exercise caution before authorizing any transactions.

The swift proliferation of AI-powered scams underscores that no industry is immune. As digital engagement grows, businesses and regulators will face mounting pressure to outpace criminals leveraging cutting-edge technologies.

Ultimately, a collaborative approach involving corporations, government bodies, and researchers is essential. By advancing technological defenses, enhancing regulatory measures, and promoting consumer vigilance, South Africa aims to safeguard its digital economy and maintain public trust.
